Poker Neural Net

Posted : admin On 4/16/2022

- A reinforcement learning neural network that plays poker (sometimes well), created by Nicholas Trieu and Kanishk Tantia. The PokerBot is a neural network that plays classic No Limit Texas Hold 'Em poker. Because No Limit Texas Hold 'Em is a standard non-deterministic game in neural network research, we decided it was the ideal game to test our network on.

- The training data are game histories, and the output is a neural network that estimates the gain or loss of each legal game action in the current game context. We believe that this data-driven approach can be applied to a wide variety of poker games with little game-specific knowledge.

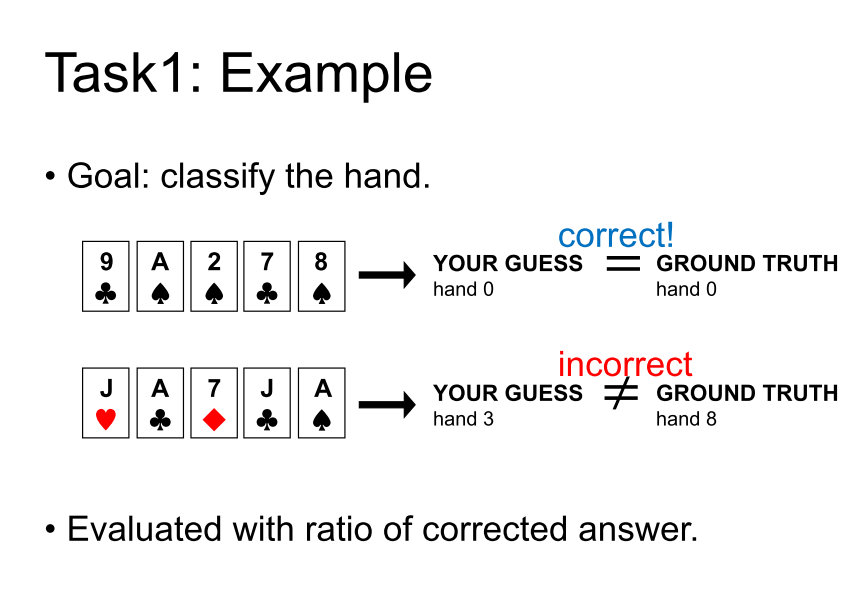
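The idea of a network that scores every legal action by its estimated gain or loss can be illustrated with a small sketch. This is not the PokerBot code: the action set, the three input features, the network size, and the function names are all assumptions made for illustration, and the weights are random rather than trained on game histories.

```python
import math
import random

# Hypothetical action set; the original project may use a different one.
ACTIONS = ["fold", "call", "raise"]


def init_network(n_inputs, n_hidden, n_outputs, seed=0):
    """Create small random weights for a one-hidden-layer network (untrained)."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)] for _ in range(n_hidden)]
    w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_outputs)]
    return w1, w2


def estimate_gains(network, features):
    """Forward pass: one estimated gain/loss per action (bias terms omitted for brevity)."""
    w1, w2 = network
    hidden = [math.tanh(sum(w * x for w, x in zip(row, features))) for row in w1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w2]


def best_legal_action(gains, legal_actions):
    """Pick the legal action with the highest estimated gain."""
    legal = [(gain, action) for gain, action in zip(gains, ACTIONS) if action in legal_actions]
    if not legal:
        raise ValueError("no legal actions available")
    return max(legal, key=lambda pair: pair[0])[1]


if __name__ == "__main__":
    # Assumed features: pot odds, hand strength, position (all scaled to 0..1).
    network = init_network(n_inputs=3, n_hidden=4, n_outputs=len(ACTIONS))
    gains = estimate_gains(network, [0.3, 0.8, 1.0])
    print(best_legal_action(gains, {"fold", "call"}))
```

Filtering by legality after the forward pass keeps the network's output size fixed while still ruling out actions, such as a raise, that the current game state does not allow.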

- The objective is to train the neural network to predict which poker hand we hold, based on the cards we supply as input attributes. The first thing we need for that is a data set.
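One way such a data set could be built is sketched below: each five-card hand becomes a fixed-length input vector, and a rule-based labeller supplies the target class. The card notation (`"AS"` for the ace of spades), the one-hot layout, the category names, and the function names are assumptions for illustration, not the format of any particular published data set. A royal flush is counted as a straight flush here.

```python
import random
from collections import Counter

SUITS = "CDHS"
RANKS = "23456789TJQKA"


def encode_hand(cards):
    """One-hot encode five cards such as 'AS' into 5 * (13 + 4) = 85 inputs."""
    vector = []
    for card in cards:
        rank, suit = card[0], card[1]
        vector += [1.0 if r == rank else 0.0 for r in RANKS]
        vector += [1.0 if s == suit else 0.0 for s in SUITS]
    return vector


def label_hand(cards):
    """Return the hand category used as the training target."""
    ranks = sorted(RANKS.index(c[0]) for c in cards)
    counts = sorted(Counter(ranks).values(), reverse=True)
    flush = len({c[1] for c in cards}) == 1
    # Five distinct consecutive ranks, or the ace-low straight A-2-3-4-5.
    straight = len(set(ranks)) == 5 and (
        ranks[4] - ranks[0] == 4 or ranks == [0, 1, 2, 3, 12]
    )
    if straight and flush:
        return "straight flush"
    if counts[0] == 4:
        return "four of a kind"
    if counts == [3, 2]:
        return "full house"
    if flush:
        return "flush"
    if straight:
        return "straight"
    if counts[0] == 3:
        return "three of a kind"
    if counts == [2, 2, 1]:
        return "two pair"
    if counts[0] == 2:
        return "one pair"
    return "high card"


def make_dataset(n_hands, seed=0):
    """Deal random five-card hands and pair each input vector with its label."""
    rng = random.Random(seed)
    deck = [r + s for r in RANKS for s in SUITS]
    rows = []
    for _ in range(n_hands):
        hand = rng.sample(deck, 5)
        rows.append((encode_hand(hand), label_hand(hand)))
    return rows
```

Because strong hands are rare in random deals, a data set generated this way is heavily skewed toward "high card" and "one pair", which is worth keeping in mind when judging a classifier's accuracy.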

DeepStack, one of several recent AI systems to face off against human players, defeated 11 professional poker players in heads-up no-limit hold’em, according to a study published in Science this month.

DeepStack beat 10 of the 11 players by statistically significant margins in matches played in December 2016. Beforehand, the study authors put the bot through deep learning training so that it could develop poker intuition for any situation.

DeepStack relied on two copies of the same neural network design, one for the first three shared cards and one for the final two, trained on 10,000 randomly drawn poker games, Ars Technica reported.

The researchers recruited 33 players through the International Federation of Poker.

Only 11 players finished 3,000 matches over the four-week period. DeepStack’s neural networks were what allowed it to essentially “learn” and model higher-level concepts, and it ran on a gaming laptop (NVIDIA GTX 1080). DeepStack was developed by researchers at the University of Alberta and a number of Czech universities.

DeepStack works through situations as a human would, learning pieces of the game as it goes and creating a strategy to defeat its human opponents.

“In some sense this is probably a lot closer to what humans do,” said Michael Bowling, professor of machine learning and the study author, to Scientific American. “Humans certainly don’t, before they sit down and play, precompute how they’re going to play in every situation. And at the same time, humans can’t reason through all the ways the poker game would play out all the way to the end.”

DeepStack isn’t the only poker-playing artificial intelligence out there. Carnegie Mellon’s Libratus, which runs on a supercomputer, recently beat four higher-ranked professional players. Its technology is similar to DeepStack’s in the later stages of computation, but it does not use the same neural networks, according to Scientific American. DeepStack also won by larger margins.

Past attempts with Claudico didn’t pan out, but Google DeepMind’s AlphaGo beat professionals at the game of Go. Even more notably, 20 years ago, Deep Blue beat World Chess Champion Garry Kasparov at his own game.

This finding reveals a lot about artificial intelligence’s ability to master imperfect-information games without relying on abstraction (that is, computing how to play in every situation before the game begins).

Lead image courtesy of Thigala shri/Flickr

Tags

Poker Players, AI